Long Polling in .NET

Polling vs Long Polling

Polling

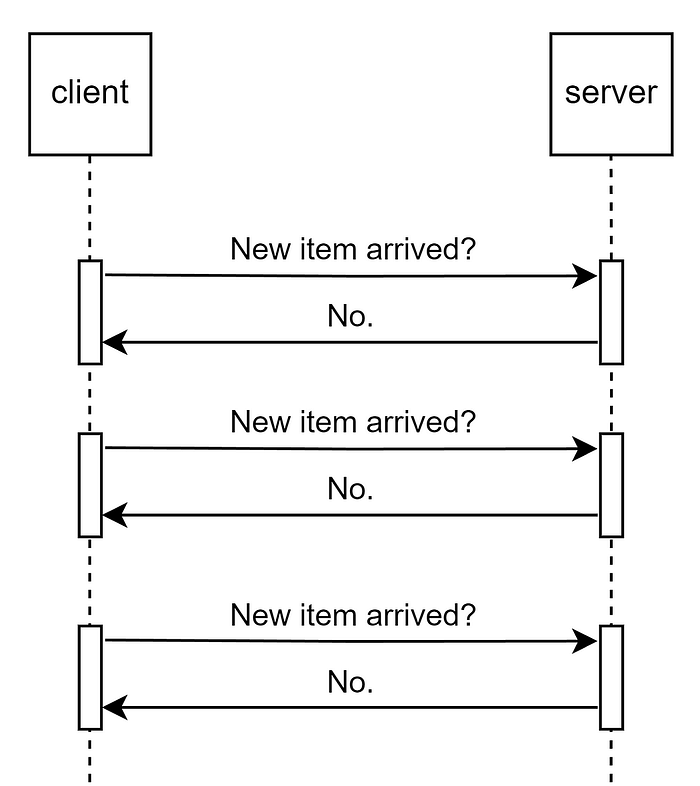

Polling consists of sending requests at set intervals to get updates.

It is the simplest way to implement near-real-time updates using HTTP requests.

The biggest advantage over other options is that the backend doesn’t need to implement anything special for it to work. You can poll any existing HTTP endpoint.

A real-life example is a client polling a server to check if any new items arrived.
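Here is a minimal C# sketch of a polling client. It assumes .NET 6 or later, because it uses `PeriodicTimer`. `PollAsync` and `checkForUpdatesAsync` are hypothetical names. In a real client, the delegate would wrap an `HttpClient` call to an endpoint you already have. The fake server in the demo exists only so the program runs without a network.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Regular polling: ask for updates on a fixed interval, whether or not anything changed.
// checkForUpdatesAsync is a hypothetical stand-in for a call to an existing endpoint.
// maxRequests only keeps the demo finite; a real client would loop until canceled.
async Task<List<string>> PollAsync(
    Func<CancellationToken, Task<string?>> checkForUpdatesAsync,
    TimeSpan interval,
    int maxRequests,
    CancellationToken cancellationToken)
{
    var received = new List<string>();
    using var timer = new PeriodicTimer(interval); // .NET 6+

    for (int i = 0; i < maxRequests && await timer.WaitForNextTickAsync(cancellationToken); i++)
    {
        // Every tick costs a full request/response, even when the answer is "nothing new".
        string? update = await checkForUpdatesAsync(cancellationToken);
        if (update is not null)
            received.Add(update);
    }
    return received;
}

// Fake server: only the third request finds something new.
int calls = 0;
Task<string?> FakeCheckAsync(CancellationToken _) =>
    Task.FromResult<string?>(++calls == 3 ? "new item" : null);

var updates = await PollAsync(FakeCheckAsync, TimeSpan.FromMilliseconds(50), maxRequests: 5, CancellationToken.None);
Console.WriteLine($"{calls} requests, {updates.Count} update(s)"); // prints "5 requests, 1 update(s)"
```

Four of the five requests in the demo come back empty. Those wasted round trips are what long polling tries to avoid.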

Long Polling

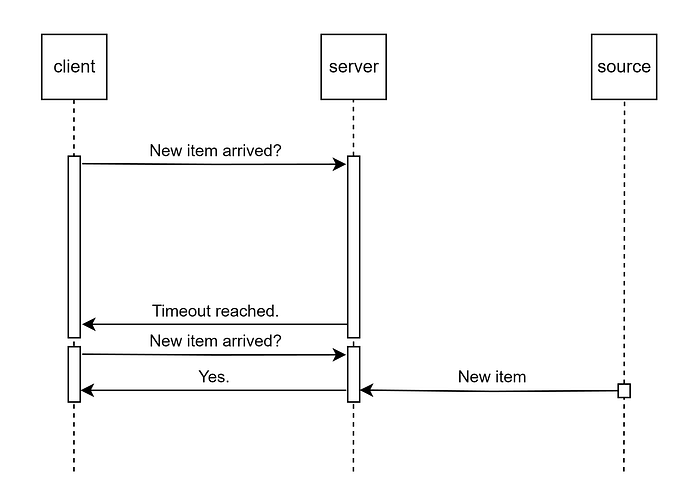

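Like polling, long polling repeatedly sends requests to get updates. Unlike polling, it doesn’t wait a fixed interval between them: the client sends the next request as soon as the previous one completes.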

The difference between polling and long polling is that the server keeps the connection open and finishes the request only when there’s an update or the timeout is reached.

This way, fewer resources are wasted on chatty communication between the client and server.

The disadvantage, compared to polling, is that the server has to implement it. You cannot long-poll an existing HTTP endpoint unless the server supports it.
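On the client side, the main change is that there is no timer: the next request goes out as soon as the previous one returns. This sketch assumes the response convention used by the endpoint later in this article: 200 OK with an item, or 204 No Content on timeout. `LongPollAsync`, `sendPollRequestAsync` and the scripted fake responses are hypothetical.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// Long-polling client loop. sendPollRequestAsync is a hypothetical stand-in for
// something like: ct => http.GetAsync("/items/poll", ct).
// Assumed convention: 200 OK carries a new item, 204 No Content means "timed out, ask again".
async Task<List<string>> LongPollAsync(
    Func<CancellationToken, Task<HttpResponseMessage>> sendPollRequestAsync,
    int maxRequests, // only here to keep the demo finite
    CancellationToken cancellationToken)
{
    var received = new List<string>();
    for (int i = 0; i < maxRequests && !cancellationToken.IsCancellationRequested; i++)
    {
        // No client-side delay: the server holds the request until it has news or times out.
        using HttpResponseMessage response = await sendPollRequestAsync(cancellationToken);
        response.EnsureSuccessStatusCode(); // 4xx/5xx throw; 204 counts as success

        if (response.StatusCode == HttpStatusCode.OK)
            received.Add(await response.Content.ReadAsStringAsync(cancellationToken));
        // On 204 No Content the loop simply re-sends right away.
    }
    return received;
}

// Fake server: times out twice (204), then delivers an item (200).
var script = new Queue<HttpStatusCode>(new[]
{
    HttpStatusCode.NoContent, HttpStatusCode.NoContent, HttpStatusCode.OK
});

Task<HttpResponseMessage> FakeSendAsync(CancellationToken _)
{
    var status = script.Dequeue();
    var response = new HttpResponseMessage(status);
    if (status == HttpStatusCode.OK)
        response.Content = new StringContent("new item");
    return Task.FromResult(response);
}

var items = await LongPollAsync(FakeSendAsync, maxRequests: 3, CancellationToken.None);
Console.WriteLine(string.Join(", ", items)); // prints "new item"
```

If you use `HttpClient` for the real requests, keep its `Timeout` (100 seconds by default) longer than the server’s long-poll timeout. Otherwise the client will abort requests that are still legitimately waiting.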

Long Polling Caveats

There are a few caveats to consider before choosing to use long polling.

Not suited for high-frequency updates

If updates arrive faster than a request can complete its round trip, nearly every long-polling request returns immediately. Long polling then degrades into regular polling, with a full HTTP request per update. This makes it a viable option only when updates are relatively infrequent.

Browser connection limitations

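Most modern browsers allow only about six concurrent HTTP/1.1 connections per host, and any further requests to that host stall until a connection frees up. (HTTP/2 multiplexes requests over a single connection, which largely avoids this limit.) Keep this in mind when opening a long-polling request. It occupies one of those connections for up to the full timeout, and the stalled requests behind it can slow down your website significantly.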

Resource consumption

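Each pending long-polling request keeps a connection open on the server for up to the full timeout. Some servers handle frequent short-lived connections better than many long-lived ones, so check how yours behaves with a large number of concurrent idle requests.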

Implementing Long Polling in .NET

Let’s implement a simple long polling endpoint in .NET 7.

The idea is simple. We create a linked CancellationTokenSource so that the loop can be canceled either by the client aborting the request or by the timeout.

We set up a 30-second timeout.

While cancellation has not been requested, we check whether there’s a new item. If there is, we return it with a 200 OK result and complete the request.

On timeout, we return a 204 No Content result to let the client know the request timed out and they should send a new one.
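The steps above can be sketched roughly as follows. This is a minimal sketch, not the original code, and it rests on three assumptions:

- A `ConcurrentQueue<string>` named `buffer` stands in for `ItemService`.
- `WaitForItemAsync` is a hypothetical helper.
- The 100 ms pause between checks is a value the text doesn’t specify.

The wait logic is kept framework-free so it runs as a plain console program. The commented-out `MapGet` call shows how a .NET 7 minimal API endpoint could map its result to 200 OK or 204 No Content.

```csharp
#nullable enable
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical in-memory buffer standing in for the article's ItemService.
var buffer = new ConcurrentQueue<string>();

// Returns the next item, or null if the timeout fires (or the client disconnects) first.
async Task<string?> WaitForItemAsync(TimeSpan timeout, CancellationToken requestAborted)
{
    // Linked source: canceled by whichever comes first, the client or the timeout.
    using var cts = CancellationTokenSource.CreateLinkedTokenSource(requestAborted);
    cts.CancelAfter(timeout);

    while (!cts.IsCancellationRequested)
    {
        if (buffer.TryDequeue(out var item))
            return item; // endpoint answers 200 OK

        try
        {
            // Assumed 100 ms pause between checks so the loop doesn't spin a CPU core.
            await Task.Delay(TimeSpan.FromMilliseconds(100), cts.Token);
        }
        catch (OperationCanceledException)
        {
            break; // timeout or client disconnect
        }
    }
    return null; // endpoint answers 204 No Content
}

// In a .NET 7 minimal API the helper could back an endpoint like this
// (the route is hypothetical; a CancellationToken parameter binds to HttpContext.RequestAborted):
//
// app.MapGet("/items/poll", async (CancellationToken ct) =>
//     await WaitForItemAsync(TimeSpan.FromSeconds(30), ct) is { } item
//         ? Results.Ok(item)
//         : Results.NoContent());

// Demo: an item is published 300 ms into a 2-second wait...
_ = Task.Run(async () => { await Task.Delay(300); buffer.Enqueue("new message"); });
var first = await WaitForItemAsync(TimeSpan.FromSeconds(2), CancellationToken.None);
Console.WriteLine(first ?? "(204 No Content)"); // prints "new message"

// ...and nothing is published during a 300 ms wait, so the timeout wins.
var second = await WaitForItemAsync(TimeSpan.FromMilliseconds(300), CancellationToken.None);
Console.WriteLine(second ?? "(204 No Content)"); // prints "(204 No Content)"
```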

A more efficient implementation

This implementation is fine if the ItemService does an in-memory check, such as checking a message buffer in a chat application.

However, most production apps persist data in a database, and checking it in a loop would run a query on every iteration for every open request. In this case, the ItemService should be a singleton service that provides a TaskCompletionSource. That TaskCompletionSource can then be completed from another part of the application, e.g., an event handler or a call from another service.

In this implementation, we do the same as before, except that instead of checking in a while loop, we wait for whichever of two tasks completes first: the itemArrivedTask or a new Task tied to our cancellation token.

When one of them completes, we return a result based on which one it was: 200 OK with the new item, or 204 No Content on timeout.

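Before returning a new item, we make sure to reset the TaskCompletionSource so it can be reused; remember, the service is a singleton. Because a TaskCompletionSource can complete only once, resetting it means replacing it with a fresh instance.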
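Here is a minimal sketch of that approach, under a few stated assumptions:

- `itemArrived`, `NotifyItemArrived` and `WaitForItemAsync` are hypothetical names.
- The singleton state is modeled as a local variable so the program runs standalone. In an app it would live in a class registered with `builder.Services.AddSingleton`.
- One difference is deliberate. The fresh TaskCompletionSource is swapped in by the notifier, using `Interlocked.Exchange`, rather than by the request handler. This way, two handlers finishing at the same time can’t both reset it and leave a newer waiter stranded on a discarded instance.

```csharp
#nullable enable
using System;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the singleton ItemService's state.
// RunContinuationsAsynchronously keeps waiters from running on the notifier's thread.
TaskCompletionSource<string> itemArrived = NewSource();

static TaskCompletionSource<string> NewSource() =>
    new(TaskCreationOptions.RunContinuationsAsynchronously);

// Called by whatever learns about new data: an event handler, a message consumer, another service.
void NotifyItemArrived(string item)
{
    // A TaskCompletionSource completes only once, so "resetting" means swapping in a new one.
    // Swap first, then complete the old one, so later waiters never see a finished task.
    TaskCompletionSource<string> previous = Interlocked.Exchange(ref itemArrived, NewSource());
    previous.TrySetResult(item);
}

// Returns the item, or null when the timeout fires (or the client disconnects) first.
async Task<string?> WaitForItemAsync(TimeSpan timeout, CancellationToken requestAborted)
{
    using var cts = CancellationTokenSource.CreateLinkedTokenSource(requestAborted);
    cts.CancelAfter(timeout);

    Task<string> itemArrivedTask = Volatile.Read(ref itemArrived).Task;
    // Never finishes on its own; it completes (as canceled) only when cts is canceled.
    Task cancellationTask = Task.Delay(Timeout.Infinite, cts.Token);

    // No loop and no repeated checks: just wait for whichever task finishes first.
    Task winner = await Task.WhenAny(itemArrivedTask, cancellationTask);
    return winner == itemArrivedTask ? await itemArrivedTask : null; // 200 OK vs 204 No Content
}

// Demo: another part of the app publishes an item 200 ms into a 2-second wait...
_ = Task.Run(async () => { await Task.Delay(200); NotifyItemArrived("new message"); });
var first = await WaitForItemAsync(TimeSpan.FromSeconds(2), CancellationToken.None);
Console.WriteLine(first ?? "(204 No Content)"); // prints "new message"

// ...and nothing is published during a 200 ms wait, so the timeout wins.
var second = await WaitForItemAsync(TimeSpan.FromMilliseconds(200), CancellationToken.None);
Console.WriteLine(second ?? "(204 No Content)"); // prints "(204 No Content)"
```

Because waiting is just an awaited task, an idle request costs no database queries and no periodic wake-ups until an item arrives or the timeout fires.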

Conclusion

I have shown you how to implement a more efficient polling technique in .NET.

Even though long polling is a niche technique that isn’t used very often, every backend engineer should be familiar with it.

Fun fact: SignalR uses long polling as its last fallback, when neither WebSockets nor Server-Sent Events are available!